Re-reading Royce

Winston W. Royce's article, "Managing the Development of Large Software Systems", is conventionally and mistakenly perceived as the origin of the Waterfall method of Software Development. However, the actual contents of the paper couldn't be more different!

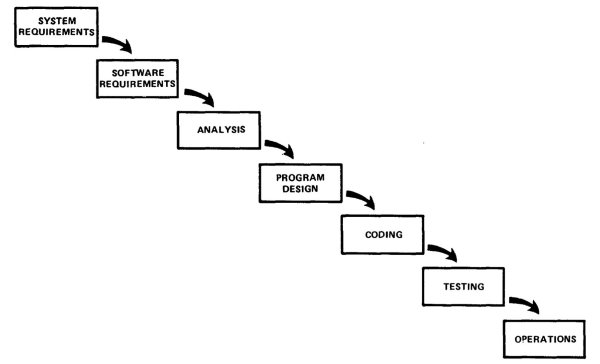

The term 'waterfall' is never mentioned. What is commonly understood to be the 'waterfall' approach, the linear sequencing of activities shown in Figure 2, is characterised as "risky and invites failure".

Risk Accumulates

Royce's core observation is that a linear approach accumulates risk throughout the process. Testing, the "phase of greatest risk", occurs at the end. Without mitigation measures, problems that only surface during testing can force changes that ripple back through the earlier phases.

"The required design changes are likely to be so disruptive that the software requirements upon which the design is based ... are violated."

Royce's approach to mitigating this risk is to reduce the scope of change by incorporating feedback loops throughout the process, so as to "maximize the extent of early work that is salvageable and preserved".

The first of these mitigation measures is a "preliminary design phase" in which program designers contribute to the system design before detailed analysis to "impose constraints". Royce intends for the non-functional requirements of a system to bound the solution space, such that the process "will culminate in the proper allocation of execution time and storage resources".

Nowadays, I see similarities to the role of solution architects working early in a project's lifecycle to define a high-level solution design, incorporating factors such as operability, system communication and coherence with organisational standards and governance.

Some of this Sounds A Bit Like XP

Two mitigation measures, if rewritten in more modern lingo, would be familiar to those who practise Kent Beck's Extreme Programming (XP).

As part of the preliminary program design phase, Royce recommends that teams "Do It Twice": essentially, run the project on a smaller scale and throw away the resulting solution, but carry what was learned into later phases.

Complexity emerges in software as our understanding of the problem grows. Deliberately exploring solutions through rapid prototyping makes it possible to get an early sense of the trouble spots. Through the prototype (Royce calls it a 'simulation'), one can "perform experimental tests of some key hypotheses".

Throwaway prototypes uncover risk in a controlled way and enable teams to experiment with potential solutions. In XP, these are called Spike Solutions and serve as a means to gather information and increase the reliability of estimates for the actual work.

Moving forward to the last point on customer involvement, the "On-Site" always-available customer is vital to XP. Involving the customer throughout the development of a system ensures that their feedback is incorporated early and often, reducing the risk of building the wrong solution. Often, programmers have built exactly what clients asked for, only for those clients to be dissatisfied upon delivery. Royce acknowledges this:

"For some reason what a software design is going to do is subject to wide interpretation even after previous agreement. It is important to involve the customer in a formal way so that he has committed himself at earlier points before final delivery."

Documentation Ain't All Bad

Royce advocates for "quite a lot" of documentation, which may seem at odds with the Agile Manifesto valuing "working software over comprehensive documentation", but I'm willing to give Royce some leeway.

Documentation is essential in software development. Code cannot unambiguously describe everything. For instance, Royce suggests documenting decisions, which is eminently sensible. Architectural Decision Records are a modern-day approach which comes to mind.

Some of this section shows its age, particularly around the separation of development and operations. Royce highlights the unsuitability of developers managing their software in live environments:

"Generally these people are relatively disinterested in operations and do not do as effective a job as operations-oriented personnel."

Royce's operating context is that of large-scale software systems in which a 'Thing' is being built. Nowadays, evergreen software is the mainstream operational context, where there isn't necessarily a pre-defined 'Thing' but rather an ongoing, iterative and evolutionary approach to design. In this context, it does make more sense for developers and operations to be closely aligned.

Another point on documentation relates to system specifications:

"During the early phase of software development the documentation is the specification and is the design. Until coding begins these three nouns (documentation, specification, design) denote a single thing".

The relationship between those three nouns brings me to Royce's suggestions about testing.

Back to Testing

Royce understands testing is a continual activity but can't quite say this. He notes earlier stages are

"aimed at uncovering and solving problems before entering the test phase".

Testing, as a fundamental concept, is a means of clarifying assumptions, of gaining shared understanding through experimentation.

Documentation, in the form of rigid specifications, acts as a proxy for shared understanding and provides no guarantees that all consumers will arrive at that same understanding. Methodologies such as Behaviour-Driven Development provide space to arrive at a shared understanding and capture that through executable documentation.
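
To make "executable documentation" a little more concrete, here is a minimal sketch. It is not a BDD framework: it uses Python's standard `doctest` module as a stand-in, with Given/When/Then phrasing in the docstring. The `withdraw` function and its overdraft rule are hypothetical, invented only for this example.

```python
import doctest


def withdraw(balance: int, amount: int) -> int:
    """Return the new balance after a withdrawal (hypothetical rule).

    Given an account holding 100, when the customer withdraws 30,
    then 70 remains:

    >>> withdraw(100, 30)
    70

    Given an account holding 100, when the customer tries to withdraw 150,
    then the request is refused:

    >>> withdraw(100, 150)
    Traceback (most recent call last):
        ...
    ValueError: insufficient funds
    """
    if amount > balance:
        raise ValueError("insufficient funds")
    return balance - amount


def check_examples() -> int:
    """Run the examples embedded in the docstring; return how many failed."""
    # Parse the docstring into a runnable test, giving it access to `withdraw`.
    test = doctest.DocTestParser().get_doctest(
        withdraw.__doc__, {"withdraw": withdraw}, "withdraw", None, 0
    )
    runner = doctest.DocTestRunner(verbose=False)
    return runner.run(test).failed


if __name__ == "__main__":
    print("failed examples:", check_examples())
```

If the code and the prose drift apart, the examples fail, which is the property a static specification cannot offer.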

Documentation can serve as a focus. Consider Amazon's six-pagers which provide the top-level overview and raison d'être. I advocate for documentation, but not to the detriment of, or a replacement for, shared understanding.

Royce sees testing as a means of gaining feedback and therefore reducing risk. He advocates for human and technical approaches. Consider the parallels between:

"Every bit of an analysis and every bit of code should be subjected to a simple visual scan by a second party"

and modern-day peer review or pair programming. Likewise,

"Test every logic path in the computer program at least once with some kind of numerical check"

should be familiar to those writing automated programmer tests (although note that 100% code coverage alone is not an indicator of quality).
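
As a rough illustration of both halves of that sentence, here is a small Python sketch. The `shipping_cost` function and its rates are invented for this example and come from neither Royce's paper nor any real system. The first set of checks visits every logic path with a numerical check; the second visits the same paths but verifies nothing.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical pricing rule, invented purely for illustration."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    # Flat fee up to 2 kg, then a per-kilogram surcharge.
    base = 5.0 if weight_kg <= 2 else 5.0 + 1.5 * (weight_kg - 2)
    # Express delivery doubles the price.
    return base * 2 if express else base


def run_path_checks() -> int:
    """Exercise every logic path once, each with a numerical check."""
    checks = [
        (shipping_cost(1.0, False), 5.0),   # light, standard
        (shipping_cost(4.0, False), 8.0),   # heavy, standard: 5 + 1.5 * 2
        (shipping_cost(1.0, True), 10.0),   # light, express
        (shipping_cost(4.0, True), 16.0),   # heavy, express
    ]
    for actual, expected in checks:
        assert actual == expected, (actual, expected)
    try:
        shipping_cost(0, False)             # invalid-input path
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for zero weight")
    return len(checks) + 1                  # number of paths checked


def run_weak_checks() -> None:
    """Visit the same valid-input paths but assert nothing about the results."""
    for weight in (1.0, 4.0):
        for express in (False, True):
            shipping_cost(weight, express)
```

Both functions execute the pricing branches, so a coverage tool would treat them much the same, yet only `run_path_checks` would notice if the surcharge rate changed.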

More Agility Than Previously Advertised

In many respects, this paper shows its age. Computing power has improved significantly in the succeeding half-century, making some previously-prohibitively-expensive activities trivial to perform on a typical developer machine.

The most radical change is the notion that a pre-defined 'Thing' is being built. Instead, we have initial expectations and assumptions about a solution that teams will successively refine based on real-life learnings.

To some extent, I feel guilty about judging an old paper by today's standards - the discipline has evolved. It strikes me that a lot here still applies to so-called "Agile" teams today, despite the paper's age and a conventional misunderstanding of its primary point.

But let's bury the notion that Royce designed and advocated for a 'waterfall' approach.